Friday Jan 09, 2026

Tuesday, 30 January 2024 19:25

Hollywood screenwriters strike because they fear losing their jobs to artificial intelligence (“AI”); Google’s Med-PaLM 2 shocked the medical profession by outperforming human doctors on the US Medical Licensing Examination; and chatbots replace customer service agents. While AI is rapidly improving and presenting new opportunities, concerns about potential disruptions loom.

But what exactly is AI, how is it used, and what implications does it hold? These questions were at the heart of a recent workshop, “AI, Big Data and Policy,” that brought together experts from academia, industry, and policy institutions. Here are some of the key insights:

The Mystery of AI Unraveled

Contrary to AI in science-fiction movies, today’s AI is a prediction technology. It uses what we know to predict what we don’t. For example, in language translation, AI predicts how people would translate a text by using information on how real humans have translated texts in the past.

Decision-making involves predicting outcomes and deciding what actions to take. By shifting prediction away from humans, AI decouples the prediction and human judgment parts of decision-making. For example, AI can predict the presence of tumors in a radiology image, but the human doctor makes the judgment regarding treatment. Reorganizing firms to efficiently combine human judgment and automated prediction is where the real productivity gains lie.

Many problems can be reframed as prediction problems: writing computer code, image recognition, e-mail or chat responses, driving, etc. “Generative AI” can even create entirely new content, like new images or code. The potential scope of AI is huge.

AI Is Here To Stay

AI use is growing worldwide, a revolution led by larger, more productive companies. Advances in AI, together with cheaper data storage and computing power through the cloud, have led to dramatic reductions in the cost of prediction, which in turn lead to better prediction and more widespread use. AI use is growing not only in advanced economies but also in developing countries like India, where demand for AI jobs is increasing.

AI is here, and it’s here to stay. But let’s address the elephant in the room: is AI going to make me more productive, or is it going to take my job?

AI’s Impact on Jobs and Growth: A Nuanced Story with Little Evidence

The impact of AI on jobs and productivity growth is at the center of many debates. Quantifying these effects remains challenging because hard data is limited. Even at the firm level, evidence for productivity gains is tentative.

One reason could be that AI is not simply “plug and play.” After the arrival of electricity, it took decades for factories to be redesigned to take advantage of the technology and for productivity gains to materialize. Similarly, reorganizing business models to leverage AI’s decoupling of prediction and judgment may demand time and effort.

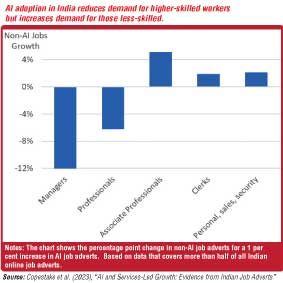

Another explanation could be that AI has nuanced and uneven impacts. While AI requires specialized skills for development and implementation, it also automates complex tasks, like writing eloquent text or computer code, which benefits less-skilled workers. In India, firms adopting AI shift their demand for workers from high-skilled to less-skilled (see Figure 1). From a development perspective, this nuance raises questions about whether AI will drive divergence or convergence between workers, firms, and countries.

Should the effects of AI be left to unfold unhindered and, if not, how should government policy adapt?

Directing AI: The Role of Policy

Different approaches have been taken to AI regulation: while the U.S. focuses on lighter-touch regulation, the European Union approved a stringent “AI Act.” But what role should policymakers play in directing AI development and use?

First, follow the physician’s code of ‘do no harm.’ Many investment policies focus on firms composed of tangible assets, but these incentives can distort technology adoption: firms invest in computers rather than buying the cloud computing services needed to develop AI. Policies, therefore, may need reconsidering for business models that increasingly comprise data or AI.

Second, policy needs a better understanding of the market failures. Are there externalities? How does AI affect market power? What are the information barriers and privacy risks? To what extent do limitations and biases in the data used to train AI mean that AI replicates or even amplifies human biases against groups of people? How does AI affect those who are less well-off within society? More research is needed.

Finally, we should think about potential government failure. With a rapidly developing technology, poorly designed regulations may curtail the development of AI. The AI industry has recently developed its own guidelines, but are these appropriate and sufficient? Recently, 28 countries and the EU published the “Bletchley Declaration,” calling for global cooperation in identifying AI safety risks.

(Source: https://blogs.worldbank.org/digital-development/decoding-ai)