Monday Feb 16, 2026

Wednesday, 7 August 2019

Early man used sticks and tree branches. Then came animal bones, stones, rocks and metal. Later, gunpowder added fuel to the fire, literally. Nuclear and chemical weapons proved that humans, in their desire for power, will not hold back from killing mercilessly.

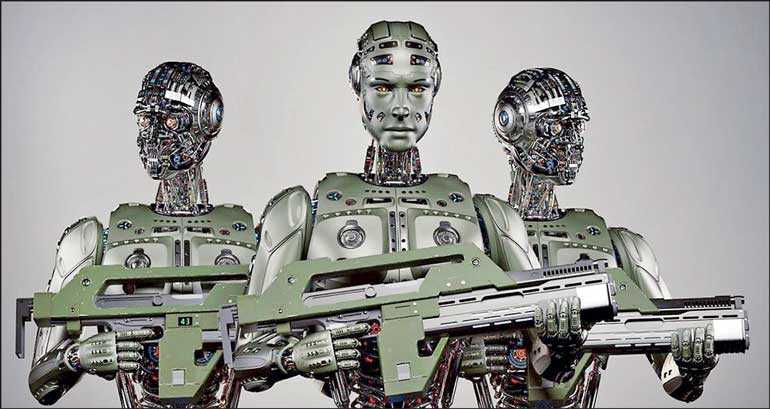

Right now, humans are busy applying sophisticated knowledge and the latest available technology to control and gain more power. Autonomous weapons were once science fiction, but not anymore.

What are autonomous weapons and what drives them?

Weapons operating without meaningful human intervention can be described as autonomous weapons. An autonomous weapon selects targets and launches an attack using the ‘intelligence’ it possesses, without human control. Where does this intelligence come from? This non-human intelligence originates from computer science.

The OECD (Organisation for Economic Co-operation and Development) describes Artificial Intelligence (AI) as a “field of computer science focused on systems and machines that behave in intelligent ways”.

Going deeper, we find a field of Artificial Intelligence called Machine Learning (ML): “ML is concerned with computer based systems of algorithms and statistical models that are designed to learn autonomously, without explicit instructions.”

Exploring further brings us to Deep Learning (DL). The OECD goes on to explain that “DL is a form of ML that classifies centres input data based on a multi-step process of learning from prior examples.”

The weapons industry is driven by the military-industrial complex, which absorbs the latest technology into weapons systems. The lovechild of the marriage between AI and the arms industry is autonomous weapons. Their use seems to be just a matter of time. The struggle for world supremacy among the superpowers drives the military-industrial complex to produce new and improved weapons systems.

The production and use of autonomous weapons might seem appealing to the general public when politicians and authorities introduce them as a way to minimise the human lives lost at war, or as a way to counter terrorism without sacrificing human soldiers. Going further, man is trying to conquer the Moon and Mars. This will also be a good justification for investing in autonomous weapons: to protect humans from unknown enemies.

Implications of the use of autonomous weapons

Imagine sending an autonomous drone weapon to the Stone Age. The instant killing machine will be hailed as the new god. It won’t be too different now. Autonomous weapons have the potential to undermine the value assigned to human lives. Their abilities can easily overpower human capabilities and override human agency. Numerous Hollywood movies have given us a sneak peek of a scary future where machines have subjugated mankind.

The struggle for world supremacy among the countries identified as superpowers will ensure that the military-industrial complex has enough funding for the Research and Development (R&D) of autonomous weapons.

According to the Campaign to Stop Killer Robots: “Fully autonomous weapons would decide who lives and dies, without further human intervention, which crosses a moral threshold. As machines, they would lack the inherently human characteristics such as compassion that are necessary to make complex ethical choices.”

A chilling real-life incident was the alleged attempted assassination of President Nicolás Maduro of Venezuela on 4 August 2018. Two drones exploded, one of them in the vicinity of where the President was addressing a military parade. Seven soldiers were injured in the incident. These drones were later found to be commercial ones with C4 plastic explosives attached to them. This was not a case of a successful attack by a fully autonomous weapon. But the incident is sufficient to understand the implications of future autonomous weapons.

Closer to home, the unfortunate Easter Sunday incident in April of this year, which claimed the lives of about 250 innocent civilians in Sri Lanka, was carried out by humans. Imagine the devastation if it had been caused by autonomous weapons. This incident also illustrates another implication of autonomous weapons.

The country’s system has still been unable to hold any individuals or groups responsible and accountable for this heinous crime. One could argue that this reflects the incompetence of the current system, since the attack was carried out by organised radical human terrorists. Humans can be traced and held accountable. But not autonomous weapons.

Accountability is a major issue for autonomous weapons. Let’s take a simple example. If a pet dog attacks someone, the owner can be held responsible. The victim of the attack can be paid compensation, including medical expenses, and if the dog is mad, it can be put down.

The Campaign to Stop Killer Robots states, “It’s unclear who, if anyone, could be held responsible for unlawful acts caused by a fully autonomous weapon: the programmer, manufacturer, commander, and machine itself. This accountability gap would make it difficult to ensure justice, especially for victims.”

The legal system and current laws are drafted by humans to prosecute humans who commit crimes against other humans, not robots which kill humans. International conventions, such as the Geneva Conventions, urge warring factions to balance military necessities and humanitarian interests. But again, these were established for wars in which humans make the decisions.

It is also dangerous to assume that only organised militaries would have access to and use of autonomous weapons. What happens when terrorists develop or take over autonomous weapons systems? What happens when criminal organisations start using these weapons? Since autonomous weapons are built on computer systems, hackers could take control of these systems, which could prove to be fatal for human civilisation.

Another alarming aspect is that the use of autonomous weapons is not only foreseen for military battles and wars. Authoritarian regimes can use autonomous weapons for their routine administration.

Referring again to the Campaign to Stop Killer Robots, “Fully autonomous weapons could be used in other circumstances outside of armed conflict, such as in border control and policing. They could be used to suppress protest and prop-up regimes. Force intended as non-lethal could still cause many deaths.”

Also, the technologies underlying autonomous weapons may not be as perfect as they are presented. Some may argue that AI can take the fallible human element out of the equation and help make objective decisions. But would it have the compassion and ethical reasoning that humans possess?

Nuclear and chemical weapons are dangerous and inhumane, but we still find them being used in certain wars. The use of autonomous weapons will be even more of a nightmare.

How can we stop it?

We must ban the R&D, manufacture and use of autonomous weapons. Wars should not be fought at all. There’s nothing humane about war. But if wars are inevitable and do get fought, let’s at least ensure that humans remain at the helm of decision-making.

AI should not be abandoned. Instead, it should be developed and used to benefit humanity.

There are enough humanitarian needs to which technology can be applied to ease the suffering of fellow humans.

Resources:

https://www.stopkillerrobots.org

https://www.oecd-forum.org/

(The writer is a Sustainable Human Development and Innovation Specialist. He is the founder of STAMPEDE SDGs Tech Accelerator, which was established to prevent future inequality created by technology. He can be reached on [email protected])